Two words have been echoing in my head today: application and soccer. The first because we are in the middle of the startup rush, and the second because Bolivar recently said goodbye to the “Copa Libertadores” (a South American soccer cup).

I remembered something I read when the soccer World Cup ended: Germany used Big Data to win the World Cup in Brazil. SAP AG actually did what could have been my dream: putting together two of my passions, soccer and algorithms. So today's post is dedicated to those two things that I enjoy so much.

What does Match Insights do?

Redusers states: “Match Insights analyzes video data from the cameras on the playing field and captures thousands of data points per second, such as the position and the speed of the players. After processing the data, the coaches are able to get performance metrics of their players and send directions to their smartphones or tablets.”

Let's analyze the previous paragraph, in text and in context

“Analyzes video data from the cameras on the playing field”

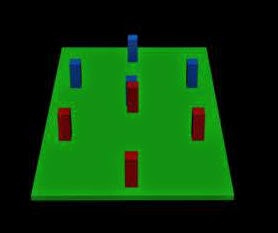

The image on the left represents a football game between the blue team and the red team. The picture doesn’t have much detail or the full number of players, but it serves as a representation.

The first option for analyzing the movement of the players is the top view, but it has the following drawbacks:

- Having the camera recording from the sky all the time could be very dangerous (for the camera) because of the ball. We could make the camera dodge it, but then the image would move and we would lose valuable seconds of information.

- From this view all we could see are black and blond heads… not very easy to recognize who’s who after a header or when they take a penalty kick. This view is not feasible.

The image below is another representation of the scheme above, but with a camera behind the red team's goalie. A program that detects objects by the tones of their pixels would tell us that there are three objects, which would be just three red-team players in this picture. In the best case the program would also detect the blue pixels and assume that there are three blue players, but this information would be wrong as well. We would get a similar impression if we put the camera on the benches where the players sit.
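To make the pixel-tone idea a bit more concrete, here is a minimal sketch in plain Python. It is only my own toy illustration, not how Match Insights works: the "frame" is a hypothetical grid of characters (`R` for a red shirt, `B` for a blue shirt, `.` for grass) and `count_blobs` is a made-up helper that counts groups of touching pixels of the same tone. In the toy frame two red players overlap from the camera's point of view, so the program sees them as a single object.

```python
from collections import deque

def count_blobs(frame, tone):
    """Count 4-connected groups of pixels whose value equals `tone`."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if frame[r][c] != tone or seen[r][c]:
                continue
            # New, unvisited pixel of this tone: flood-fill the whole blob.
            blobs += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and frame[ny][nx] == tone):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return blobs

# Hypothetical frame: three red players, but two of them overlap on screen.
FRAME = [
    "............",
    ".RR......BB.",
    ".RR......BB.",
    "............",
    "....RRRR....",  # two red players side by side, merged into one blob
    "....RRRR....",
]

print("red objects:", count_blobs(FRAME, "R"))
print("blue objects:", count_blobs(FRAME, "B"))
```

With this frame the count for red comes out as 2 even though three red players are on screen, which is exactly the kind of wrong answer a single camera angle gives us.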


Another option would be to place the camera on the south side, where the crowd is. Because of the elevation, we could get something like the image on the left. But what happens? There’s always the fan who jumps with excitement at a goal (well, who doesn’t?), or the person asking permission to pass, or the falling confetti, or maybe the person who pushes you (and the camera too) unintentionally. In short: camera movement and/or a few seconds lost. If we used the program that detects objects by the tones of their pixels, we would get 7 players and not 8, since the blue midfielder would be covered by a red one. Perhaps the complexity of object recognition is not very noticeable here, but when there’s a penalty… all the players are crammed around the goal. Even I find it difficult to recognize who is who.

And with that, wherever we put the camera, we would most likely run into the same problems or others not considered here.

Solution? Video mashup.

Example: Automatic mashup generation from multiple-camera concert recordings

A video mashup is the integration of several video recordings with one goal. In the case of Match Insights, that goal is to have the most complete information about the movement of the players.

If we had 360 cameras placed around the field, equidistant and at the same level, we could get that epic shot from The Matrix when Neo fights the guards (?) of the other world. (Here’s a video to give you an idea.)

If a camera is blocked by a spectator for a few seconds, it would be enough to interpolate from the other cameras and complete the information. The software that helps recognize patterns in pixels and, as far as I recall, includes a learning engine is OpenCV.
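Here is a minimal sketch of that "fill in from the other cameras" idea, again in plain Python and again just my guess at the simplest possible version. I am assuming (a big assumption!) that each camera's pixels have already been converted into field coordinates in meters, and that a blocked camera simply reports `None` for that frame. The function name `fuse_cameras` and the sample numbers are made up for illustration, and I use a plain average of the cameras that can see the player rather than any fancier interpolation.

```python
def fuse_cameras(observations):
    """Combine per-camera position lists into one track.

    observations: one list per camera; each entry is an (x, y) position in
    meters for that frame, or None when the camera's view was blocked.
    Returns one fused (x, y) per frame, or None if every camera was blocked.
    """
    fused = []
    for frame_views in zip(*observations):
        visible = [p for p in frame_views if p is not None]
        if not visible:
            fused.append(None)  # nobody saw the player in this frame
            continue
        x = sum(p[0] for p in visible) / len(visible)
        y = sum(p[1] for p in visible) / len(visible)
        fused.append((x, y))
    return fused

# Hypothetical data: camera A is blocked by a spectator in frames 1 and 2.
camera_a = [(10.0, 5.0), None, None, (13.0, 5.0)]
camera_b = [(10.0, 5.0), (11.0, 5.0), (12.0, 5.0), (13.0, 5.0)]

print(fuse_cameras([camera_a, camera_b]))
```

The track stays complete even though camera A lost two frames, because camera B covers the gap. The truly hard part (turning each camera's pixels into shared field coordinates) is hidden inside my assumption.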

“and captures thousands of data points per second, such as the position and speed of the players.”

My digital camera captures up to 16 megapixels (16 million pixels), so in my opinion the “thousands of data points” fall short compared to a video recording :P. With the information obtained in the previous section we could treat each player as an object, approximate the distance it covers in each time interval, and from that measure its speed. Each player would be treated as an object, regardless of name. Once the match is over, with the recordings processed and the players' initial positions known, we would then indicate that object X was actually James Rodriguez, so to speak.
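The speed part is the easiest to sketch: distance covered divided by the time between samples. Below is a small Python example of that arithmetic. The function name `speeds`, the sample track and the one-second sampling interval are all my own assumptions for illustration; positions are assumed to be field coordinates in meters.

```python
import math

def speeds(track, dt):
    """Speed in m/s for each interval of a track.

    track: list of (x, y) positions in meters, sampled every `dt` seconds.
    """
    return [math.dist(a, b) / dt for a, b in zip(track, track[1:])]

# Hypothetical track of "object X", one sample per second.
track_x = [(0.0, 0.0), (3.0, 4.0), (3.0, 4.0), (6.0, 8.0)]

per_interval = speeds(track_x, dt=1.0)
print(per_interval)       # [5.0, 0.0, 5.0]
print(max(per_interval))  # top speed over the track: 5.0
```

Each step from (0, 0) to (3, 4) is a 3-4-5 triangle, so the object covers 5 meters in one second. Naming the object (deciding that object X is James Rodriguez) would come afterwards, from the initial positions.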

Where is the tricky part then?

We don’t have permission to put 360 cameras in those ideal positions :P But we DO have the television cameras of the broadcasters that paid for the transmission, although they come with the same kind of “disadvantages” as our own cameras. When a goal is scored, some cameras stop following the match to focus on the emotion of the player who scored and the excitement of the fans. That does not mean that ALL cameras switch to that/those image(s), though; others keep recording the field. In this situation one or two amateur cameras would help, but the hard part would be working out the algorithms needed to integrate these images.

As for sending the information to the tablets and devices… hmmm… well, I leave that to your imagination (in the worst case I could send it by email lol). I hope this has sparked your imagination and curiosity. Let me tell you one more time that this is just my point of view and may be far from reality (though I doubt it).

**Cheers :)**

RizelTane